GPGPU Languages

The current list of high-level GPGPU languages stands at:

- Accelerator from Microsoft

- Brook from Stanford University, USA

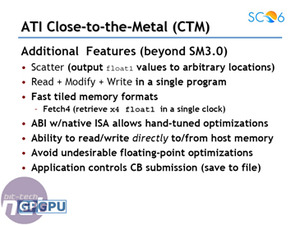

- CTM (Close To Metal) for AMD GPUs

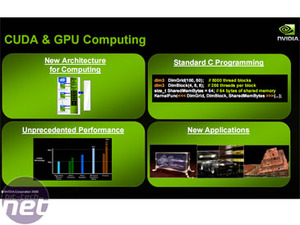

- CUDA for Nvidia GPUs

- PeakStream

- RapidMind

Accelerator, Brook, Sequoia, PeakStream and RapidMind are trying to help here: their respective SDKs are designed to show off the GPU's high-performance computing capabilities, rather than its ability to run games at blistering frame rates. These are high-level syntaxes built around familiar languages and platforms such as .NET, C and C++, and they are usable on all GPUs.

On the other hand, CTM and CUDA are limited to GPU families from AMD and Nvidia respectively. In our opinion, there's less chance that either of these will take off and become the de facto programming language for GPGPU, because neither is vendor-independent. That's not to say the two initiatives won't receive industry support, because both already do.

CTM is AMD’s “Close To Metal” approach to GPGPU, which allows you to get right down into the heart of its graphics processors. It's possible to control all of the GPU's basic elements, giving you direct control over the processors and memory. This lets application developers optimise their code by hand, which can bring performance close to the hardware's theoretical maximum.

Despite this, CTM is still a high-level language (like C, as opposed to a low-level language like assembler). While it is still in its relative infancy, it's possible to use AMD's CTM library to compile apps written in C/C++. Other popular programming languages like Java aren't available for CTM yet, but support is on the cards for the future.

CUDA works in much the same way as CTM, but for Nvidia hardware instead of AMD's (formerly ATI's) GPUs. The CUDA compiler allows you to code in a high-level language like C, using Nvidia's libraries. The SDK only works with Nvidia's current generation DirectX 10 GPUs (namely G80, G84 and G86), because CUDA was only implemented in the GeForce 8-series architecture -- we've heard nothing about whether previous Nvidia graphics processing units will be able to make use of CUDA, or other GPGPU languages for that matter.
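To give a feel for what "coding in a high-level language like C" means in this style of programming, here is a minimal sketch in plain C++ that runs on the CPU and needs no GPU. It is not CUDA code: the names `saxpy_kernel` and `launch_saxpy` are hypothetical, and the loop only stands in for the hardware running one thread per element. In real CUDA C the per-element function would be marked `__global__` and would work out its index from the thread and block indices.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical "kernel": the work a single GPU thread would do for element i.
// Here i is an ordinary argument; on a GPU it would come from the thread index.
void saxpy_kernel(std::size_t i, float a, const float* x, float* y) {
    y[i] = a * x[i] + y[i];
}

// Stand-in for a kernel launch (an assumption for illustration only):
// a CPU loop plays the role of the hardware scheduling one thread per element.
void launch_saxpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    const std::size_t n = x.size() < y.size() ? x.size() : y.size();
    for (std::size_t i = 0; i < n; ++i) {
        saxpy_kernel(i, a, x.data(), y.data());
    }
}
```

The point of the split is that the programmer writes only the per-element function in a C-like language, and the toolchain and hardware decide how many copies run at once.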

While you may think that two separate instruction sets are the opposite of what you’re used to on a CPU, this is actually far from the truth. Even in the world of CPUs, you can get programs optimised for Intel, AMD, Microsoft or SSE2/SSE3/SSE4 that run faster on specific CPU architectures. However, because everything still has to run on the common x86 architecture, there are always fallback mechanisms in place.
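The fallback idea on the CPU side can be sketched roughly as follows, assuming a GCC or Clang compiler targeting x86. The function names (`sum_scalar`, `sum_sse2`, `sum_dispatch`) and the `HAVE_SSE2_PATH` macro are made up for this illustration. The program checks at run time whether the CPU reports SSE2 and otherwise drops back to a plain loop that any x86 chip can execute.

```cpp
#include <cstddef>

// Build the SSE2 path only where the compiler targets SSE2 and offers the
// GCC/Clang builtin used for the run-time check below.
#if defined(__SSE2__) && (defined(__GNUC__) || defined(__clang__))
#include <emmintrin.h>
#define HAVE_SSE2_PATH 1
#else
#define HAVE_SSE2_PATH 0
#endif

// Portable fallback: works on any CPU.
double sum_scalar(const double* data, std::size_t n) {
    double total = 0.0;
    for (std::size_t i = 0; i < n; ++i) total += data[i];
    return total;
}

#if HAVE_SSE2_PATH
// SSE2 path: adds two doubles per instruction, then handles the leftover element.
double sum_sse2(const double* data, std::size_t n) {
    __m128d acc = _mm_setzero_pd();
    std::size_t i = 0;
    for (; i + 2 <= n; i += 2) {
        acc = _mm_add_pd(acc, _mm_loadu_pd(data + i));
    }
    double lanes[2];
    _mm_storeu_pd(lanes, acc);
    double total = lanes[0] + lanes[1];
    for (; i < n; ++i) total += data[i];
    return total;
}
#endif

// Dispatcher: use the optimised path if the CPU reports SSE2, else fall back.
double sum_dispatch(const double* data, std::size_t n) {
#if HAVE_SSE2_PATH
    if (__builtin_cpu_supports("sse2")) return sum_sse2(data, n);
#endif
    return sum_scalar(data, n);
}
```

Callers only ever use `sum_dispatch`, so the same binary runs everywhere and simply runs faster where the extra instructions exist. That safety net is what vendor-specific GPGPU languages lack.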

Intel's future graphics/CPU project, codenamed "Larrabee", is tipped to be a many-core CPU part with specific graphics functions and is said to be tailored as a floating-point powerhouse. Since the graphics market is saturated by Nvidia and AMD, but the GPGPU world is relatively fresh, Intel can still make a break for it and get in while it's still early days.
